ChatGPT has become so popular that, in just a year and a half, it can be called a household name. The algorithms behind this powerful AI tool also power many other apps and services. But have you ever wondered how these algorithms work?

Well, if you are curious to know how ChatGPT and other such tools work, then it is important to understand the language engine that serves as their building block.

Let’s break it down with an example! Suppose you are having a conversation with a machine: you give it a query or a prompt, and it returns a relevant response or piece of information. Looks simple, right? But that’s not the whole story. Prompt engineering is the practice of framing the right questions and instructions to direct AI models, particularly Large Language Models (LLMs), so they give you the desired outcomes.

Currently, two of the most widely used LLMs are GPT-3.5 and GPT-4. However, we can expect competition to increase significantly in the coming years.

Whether you’re a computer geek excited to explore the latest in AI or someone who works with LLMs professionally, understanding the concept of AI prompt engineering is crucial.

As you read through this article, many of the technical complexities of prompt engineering for ChatGPT will be demystified. So, let’s get going!

What is ChatGPT?

ChatGPT is a chatbot developed by OpenAI, based on the GPT (Generative Pre-trained Transformer) language models. These models can perform various tasks such as:

- Answering questions

- Composing text

- Generating emails

- Engaging in conversations

- Explaining code

- Translating natural language to code

The success of these tasks depends on how well you phrase your questions and prompts.

Key Features:

- Versatility

ChatGPT can help with creative tasks like writing a Shakespearean sonnet about your cat, as well as practical tasks like brainstorming subject lines for marketing emails.

- Data Collection

While users benefit from its capabilities, OpenAI gathers data from real interactions to improve the model. This led to restrictions in Italy in early 2023, which have since been resolved.

- Access to Models

GPT-3.5: Free for everyone, slightly less powerful.

GPT-4: Available to ChatGPT Plus users, with a limit of 25 messages every three hours.

- Context Retention

ChatGPT can remember the context of your ongoing conversation, making interactions more coherent and meaningful. You can also ask for revisions based on previous discussions, making the interaction feel more like a genuine conversation.

ChatGPT demonstrates the power of GPT models in an accessible way, helping users understand their potential without needing a deep knowledge of machine learning.


How Does ChatGPT Work?

ChatGPT works by analyzing your input and generating the most appropriate response based on its training. While this might sound straightforward, there’s a lot going on in the background. Here’s a detailed look at the processes that make it function effectively:

- Pre-training the Model

ChatGPT starts with a pre-training phase, during which it is trained on an extensive dataset comprising various parts of the Internet. In this phase, the model learns patterns, structures, and relationships within the data.

- Architecture

ChatGPT uses a transformer architecture, which is recognized for its attention mechanisms. These mechanisms enable the model to grasp long-range dependencies and relationships within the data.

- Tokenization

The input text passes through tokenization, breaking it down into smaller units like words or subwords. Each token is then assigned a numerical value, creating a sequence of tokens that the model can analyze.

- Positional Encoding

Positional encoding is incorporated to convey the positional information of tokens within a sequence. This assists the model in understanding the sequential order of words in a sentence.

- Model Layers

Multiple layers in the model employ self-attention mechanisms to refine input understanding, considering the context of each token in relation to others.

- Training Objectives

In pre-training, the model predicts the next word based on the preceding context, facilitating unsupervised learning to grasp grammar, semantics, and general knowledge.

- Parameter Fine-Tuning

After pre-training, the model undergoes fine-tuning through supervised learning on labeled datasets for tasks such as translation, summarization, or question-answering to enhance performance.

- Prompt Handling

In ChatGPT, users input prompts to receive responses, and the model generates outputs based on learned patterns and context. Crafting those prompts well is the skill known as prompt engineering.

- Sampling and Output Generation

The model produces responses using sampling methods like temperature-based sampling. Higher temperatures yield more random outputs, while lower temperatures result in more deterministic responses.

- Post-processing

After generation, the output undergoes post-processing to convert numerical tokens to readable text, ensuring coherence and proper formatting in the final output.

- User Interaction Loop

This iterative process involves gathering user feedback and refining the model’s responses. Fine-tuning based on this feedback is essential for improving the relevance and coherence of generated responses.

- Deployment

Once trained and fine-tuned, ChatGPT can be deployed for diverse applications like chatbots and content generation. It engages in conversations and delivers helpful outputs.
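The tokenization and post-processing steps described above can be sketched in a few lines of Python. This is only a toy illustration: it splits on whole words and punctuation, whereas production models use learned subword tokenizers, and the function names (`tokenize`, `build_vocab`, `encode`, `decode`) are hypothetical helpers made up for this example.

```python
import re

def tokenize(text):
    """Split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    """Tokenization step: turn text into a sequence of numeric token IDs."""
    return [vocab[tok] for tok in tokenize(text)]

def decode(ids, vocab):
    """Post-processing step: turn numeric token IDs back into readable text."""
    id_to_token = {i: tok for tok, i in vocab.items()}
    return " ".join(id_to_token[i] for i in ids)

text = "The cat sat on the mat."
vocab = build_vocab(tokenize(text))
ids = encode(text, vocab)
print(ids)                 # [0, 1, 2, 3, 0, 4, 5]
print(decode(ids, vocab))  # the cat sat on the mat .
```

Notice that both occurrences of "the" map to the same ID. The model only ever sees these numbers, which is one reason small wording changes in a prompt can shift the output.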

That wraps up our brief overview of ChatGPT! Let’s now take an in-depth look at the prompt, the part of ChatGPT we work with in the foreground.

What is a Prompt?

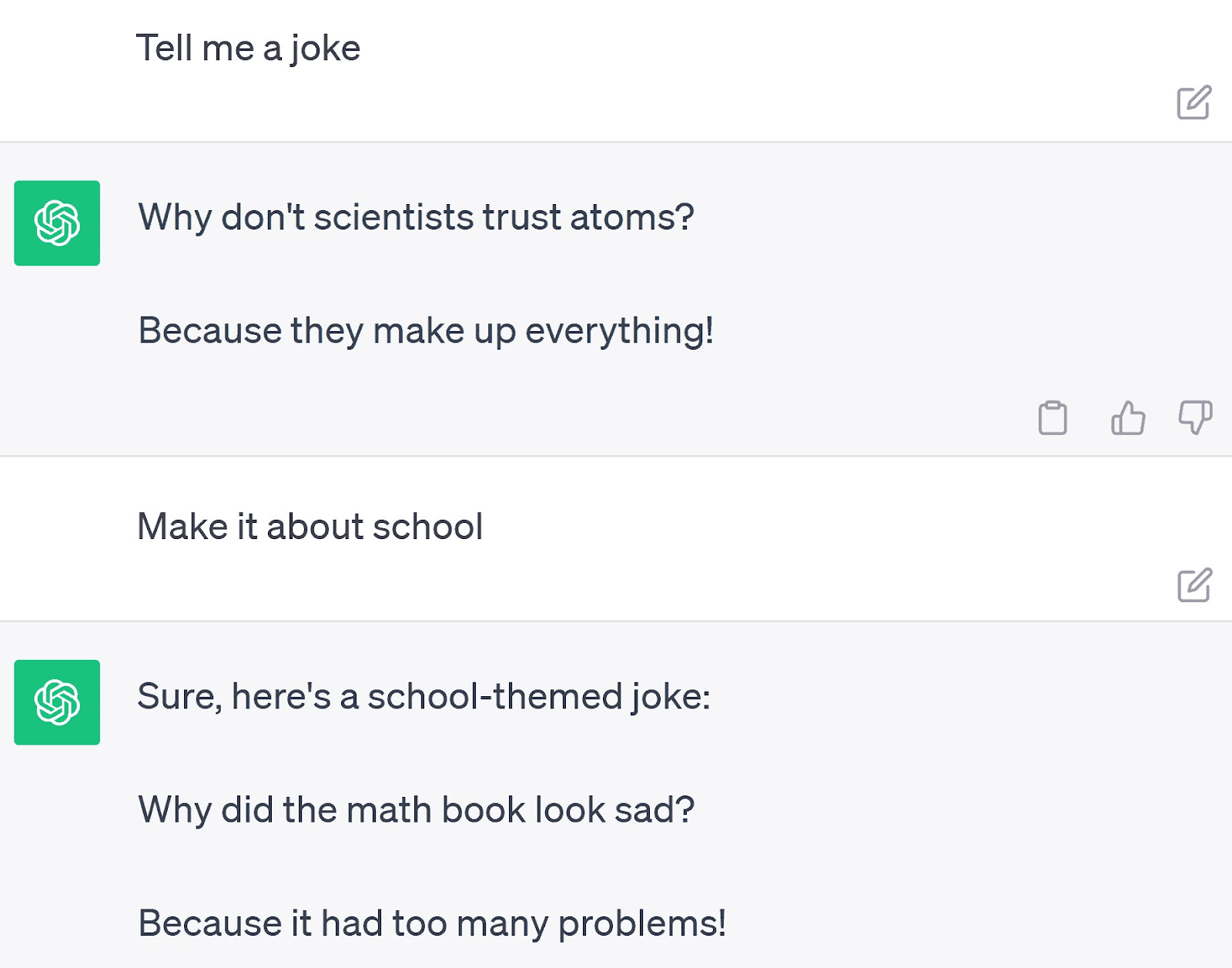

ChatGPT prompts are input commands or questions provided to the artificial intelligence interface to generate responses. These prompts typically contain specific keywords or phrases designed to elicit a conversational reply. Users input questions or instructions to ChatGPT, which then generates responses as if engaging in a conversation.

Eg.,

You can continue the conversation by giving another prompt or directive that builds on its response.

ChatGPT prompts are a valuable resource for content marketers, helping in the creation of engaging content across various platforms. Through natural language processing, ChatGPT generates diverse content, including social media posts, lesson plans, herbal remedy explanations, and yoga pose descriptions.

With prompt engineering, marketers can craft lists, meta descriptions, guides, and tutorials. Additionally, it assists in programming translations, career guides, RSA format tables, and partnership strategies.

What is Prompt Engineering?

Prompt engineering is similar to guiding a child’s learning through questioning. It involves crafting prompts that direct an AI model, particularly a Large Language Model (LLM), to produce specific outputs. This process is essential for shaping the responses generated by AI models, much like how well-constructed questions influence a child’s thinking.

Definition:

Prompt engineering is about crafting questions or instructions to get desired responses from AI models. It acts as a bridge between human input and machine output. In AI, where models learn from huge datasets, the right prompt is crucial for clear communication.

Example:

When you talk to Siri or Alexa, how you ask for something matters. Saying “Play some relaxing music” is different from “Play Beethoven’s Symphony” and will give you different outcomes.

How Did Prompt Engineering Evolve?

Prompt engineering, a relatively new field, is deeply connected to the history of Natural Language Processing (NLP) and machine learning. Here’s how it has evolved over time:

Early NLP efforts: NLP traces back to the mid-20th century when digital computers emerged. Initial approaches relied on manual rules and basic algorithms, struggling with language complexities.

Statistical methods: As computing power grew, statistical techniques gained prominence in the late 20th and early 21st centuries. Machine learning algorithms allowed for more adaptable language models, though still limited in context understanding.

Transformer architecture: The introduction of transformers in 2017 marked a breakthrough. With self-attention mechanisms, transformers like Google’s BERT captured intricate language patterns, transforming tasks like text classification.

OpenAI’s GPT series: GPT models, notably GPT-2 and GPT-3, took transformers to new heights. With billions of parameters, they produced human-like text, emphasizing the need for precise prompts.

Modern-day significance: Prompt engineering for ChatGPT is now vital, ensuring effective use of transformer-based models in various fields. It bridges the gap between powerful AI tools and user needs, from creativity to data science.

The Technical Side of Prompt Engineering

Prompt engineering for ChatGPT involves both language skills and understanding the technical aspects of AI models. Here’s a closer look at the technical aspects:

Model architecture: AI models like GPT are built on transformer architectures, allowing them to understand context. Crafting good prompts means knowing how these models are built.

Training data and tokenization: Models are trained on big datasets and break input into smaller parts (tokens). How data is broken down can affect how a model interprets a prompt.

Model parameters: AI models have lots of parameters that determine their behavior. Prompt engineers need to understand how these parameters affect responses.

Sampling techniques: Models use methods like temperature setting to decide how random or diverse their responses are. Adjusting these techniques can improve response quality.

Loss functions and gradients: These guide a model’s learning process. While prompt engineers don’t directly change them, understanding them helps in predicting model behavior.
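To make the temperature setting mentioned under sampling techniques more concrete, here is a minimal sketch in plain Python. The candidate tokens and their scores (logits) are made-up numbers for illustration, and `softmax_with_temperature` and `sample_token` are hypothetical helper names, not part of any particular library.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - highest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Made-up candidates for the next word after "The cat sat on the ..."
tokens = ["mat", "sofa", "roof"]
logits = [2.0, 1.0, 0.1]

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])

print(sample_token(tokens, logits, temperature=0.2))
```

At a low temperature almost all of the probability lands on the top-scoring token, so the output is close to deterministic. At a higher temperature the probabilities flatten out and less likely tokens get picked more often.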

How does Prompt Engineering work?

Prompt engineering shapes how AI models respond to user inputs. These models, like ChatGPT, are based on transformer architectures, which help them understand language and process lots of data. With ChatGPT prompt engineering, we guide the AI to generate meaningful and coherent responses.

This involves various techniques like tokenization, adjusting model parameters, and sampling methods. These techniques ensure that the AI understands our prompts well and gives us helpful answers.

Foundation models, which are big language models built on transformer architecture, are the backbone of generative AI. They have all the information needed to generate responses.

Generative AI models work by processing natural language inputs and creating outputs based on them. Models like DALL-E and Midjourney even create images from text descriptions. Effective prompt engineering combines technical knowledge with understanding language, vocabulary, and context to get the best results with minimal revisions.

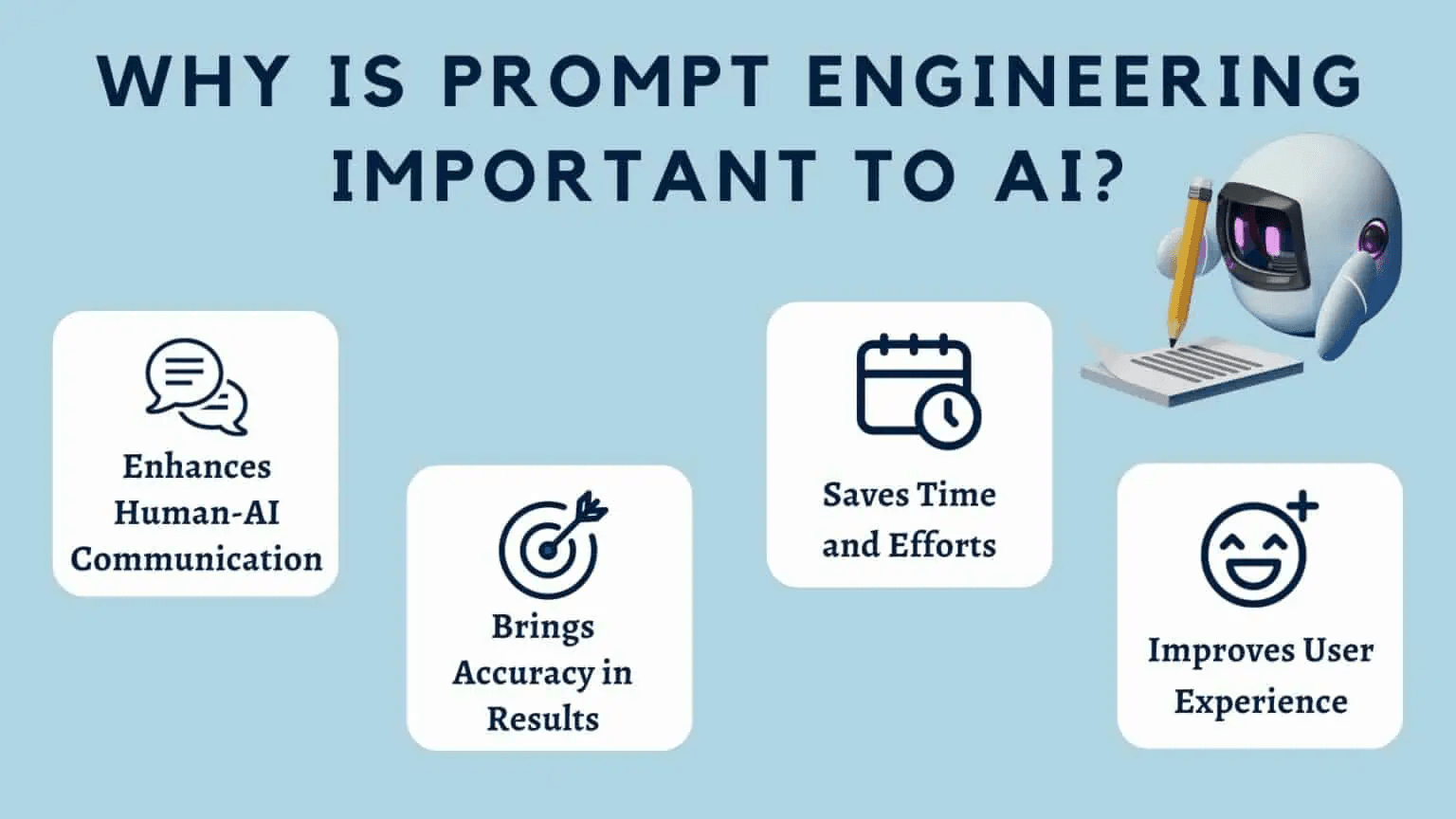

Why is Prompt Engineering So Important?

Prompt engineering skills are increasingly valuable in various roles within the artificial intelligence and machine learning field, despite the lack of specific job titles. These skills are crucial for effectively guiding generative AI models to produce desired outputs. Here’s why mastering prompt engineering for ChatGPT is essential for a successful career in AI.

1. AI Research and Development

Prompt engineering skills are important for researchers and developers who adapt pre-trained models to specific tasks in AI projects. These skills enable customization of model behavior to achieve desired outcomes in various domains such as natural language processing, image generation, and code generation.

2. Content Generation and Marketing

In content creation and marketing, AI prompt engineering skills are valuable for aligning AI-generated content with brand voice and objectives. This ensures that written content, ad copies, and social media posts effectively represent the brand.

3. Conversational AI and Chatbot Development

Skilled prompt engineers are crucial in the era of chatbots and conversational AI. Their ability to craft prompts ensures coherent and contextually relevant responses, essential for creating user-friendly and effective conversational agents.

4. Data Science and Analytics

In data science roles, professionals utilize generative AI tools for tasks like data synthesis and text generation. Effective prompt engineering for ChatGPT improves model performance and relevance for specific business goals.

5. Ethical AI and Bias Mitigation

In roles emphasizing AI ethics and fairness, there’s a rising need for prompt engineering skills to mitigate bias and ethical concerns, guiding AI behavior responsibly.

6. Startups and Innovation Hubs

In startup settings and innovation centers, prompt engineering experts play a key role in designing and refining AI-driven products and services, fostering innovation and product optimization.

7. AI Consulting and Solutions Architecture

In AI consulting or solutions architecture, ChatGPT prompt engineering skills are essential for advising clients on implementing generative AI models. Effective prompts ensure alignment with client needs and objectives.

What are the Latest Developments in Prompt Engineering?

In early 2024, prompt engineering is rapidly advancing, shaping how we interact with AI, especially Large Language Models (LLMs). Here’s a look at the latest developments:

- Improved contextual understanding

LLMs like GPT-4 now excel in grasping complex prompts, considering broader context, and delivering nuanced responses. Enhanced training methods with diverse datasets contribute to this progress.

- Adaptive prompting

AI models are adapting responses based on users’ input styles, making interactions more natural. This personalization enhances user experience, especially in applications like virtual assistants.

- Multimodal prompt engineering

AI models can now process mixed inputs of text, images, and audio, mimicking human perception. This advancement opens avenues for more comprehensive AI applications.

- Real-time prompt optimization

Technology enables AI models to provide instant feedback on prompt effectiveness, guiding users in crafting clearer and bias-free prompts.

- Domain-specific integration

Prompt engineering integrates with specialized AI models in fields like medicine and finance, enhancing precision and relevance in responses.

These developments signify a shift towards more intuitive, responsive, and tailored AI interactions, driving innovation across industries.


Real-world Applications of Prompt Engineering

Prompt engineering, as generative AI becomes more accessible, finds diverse applications in solving real-world challenges:

- Chatbots

Crafting effective prompts ensures AI chatbots deliver contextually relevant and coherent responses in real-time conversations, enhancing user experience and interaction quality.

- Healthcare

Prompt engineering for ChatGPT guides AI systems in summarizing medical data and providing treatment recommendations, enabling accurate insights and personalized healthcare solutions.

- Software development

Instructing AI models with effective prompts aids in generating code snippets and offering solutions to programming challenges, streamlining development processes and boosting productivity.

- Software engineering

Utilizing prompt engineering in software development optimizes code generation, simplifies complex tasks, automates coding, and enhances debugging, ultimately improving efficiency and reducing manual effort.

- Cybersecurity and computer science

Crafting prompts for AI models facilitates the development and testing of security mechanisms, aiding in simulating cyberattacks, designing defense strategies, and identifying software vulnerabilities.

These diverse applications highlight the versatility and impact of AI prompt engineering across various domains, driving innovation and problem-solving in critical areas.

The Art and Science of Crafting Effective Prompts

Creating a successful prompt blends artistic flair with scientific precision. It’s an art, demanding creativity and linguistic insight. Yet, it’s also a science, rooted in understanding how AI models interpret and produce outputs.

The Concept of Prompting

Each word in a prompt holds significance as it shapes the response from an AI model. Even subtle changes in phrasing can result in vastly different outputs. For example, requesting to “Describe the Eiffel Tower” versus “Narrate the history of the Eiffel Tower” will elicit distinct responses. The former may focus on its physical attributes, while the latter may explore its historical context.

In the field of Large Language Models (LLMs), understanding these nuances is crucial. LLMs, trained on extensive datasets, generate responses based on the cues provided. Crafting prompts isn’t merely about asking questions; it’s about formulating them in a manner that aligns with the desired outcome.

Components of an Effective Prompt

Instruction: This outlines the main task for the model, providing clear direction on what you want it to accomplish. For instance, “Summarize the following text” sets a specific action for the model to take.

Context: Contextual information helps the model understand the broader context in which it should operate. For example, “Given the current market conditions, suggest investment strategies” provides background information that guides the model’s response.

Input Data: This is the specific data or information the model should process to generate its response. It could be a passage of text, numerical data, or any other input relevant to the task.

Output Indicator: This element specifies the format or style of the desired response. For example, “Rephrase the following sentence in a formal tone” directs the model on how to structure its output.

Each of these elements plays a crucial role in shaping the model’s understanding and ensuring that it produces the desired output.
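As a rough sketch of how the four components can be combined into a single prompt, consider the small helper below. The function name `build_prompt`, the label text ("Context:", "Instruction:" and so on), and the ordering are illustrative assumptions; models do not require this exact layout.

```python
def build_prompt(instruction, context="", input_data="", output_indicator=""):
    """Assemble a prompt from the four components; only the instruction is required."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Instruction: {instruction}")
    if input_data:
        parts.append(f"Input: {input_data}")
    if output_indicator:
        parts.append(f"Output format: {output_indicator}")
    return "\n".join(parts)

prompt = build_prompt(
    instruction="Summarize the following text.",
    context="The summary is for a newsletter read by non-technical managers.",
    input_data="Large language models predict the next token in a sequence.",
    output_indicator="Two sentences in a formal tone.",
)
print(prompt)
```

Keeping the components separate makes it easy to change one of them, for example the output format, while leaving the rest of the prompt untouched.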

Techniques in Prompt Engineering

1. Basic Techniques

Role-playing: Direct the model by assuming specific roles, such as a nutritionist or historian, for tailored responses. For instance, prompt as a nutritionist to evaluate a diet plan for scientifically grounded feedback.

Iterative refinement: Begin with a broad prompt and progressively refine it based on model responses. This iterative approach refines prompts towards desired outcomes.

Feedback loops: Incorporate model outputs to adjust subsequent prompts, ensuring responses better match user expectations with each interaction.
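The basic techniques above can be sketched as operations on a running list of chat messages. The dictionary layout with `role` and `content` keys follows a convention used by several chat-style APIs and is treated as an assumption here; no real API is called, and `model_reply` is a placeholder string standing in for whatever the model actually returned.

```python
def start_conversation(role, task):
    """Role-playing: open with a message that assigns the model a persona."""
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]

def add_feedback(messages, model_reply, feedback):
    """Feedback loop: record the model's reply, then add a refining follow-up."""
    return messages + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": feedback},
    ]

messages = start_conversation(
    role="a registered nutritionist",
    task="Evaluate this diet plan: oatmeal for breakfast, salad for lunch, pasta for dinner.",
)

# Placeholder reply; in practice this would come from the model.
messages = add_feedback(
    messages,
    model_reply="The plan is low in protein and could use more variety.",
    feedback="Shorten that to three bullet points and suggest one protein source per meal.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Each round of iterative refinement simply appends to the same list, so the model sees the whole history and can revise its earlier answer instead of starting over.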

2. Advanced techniques

Zero-shot prompting: It gives the AI model a task without any worked examples in the prompt, testing its ability to generalize and produce relevant outputs from the instruction alone.

Few-shot prompting, or in-context learning: This provides the model with a few examples to guide its response. By offering context or previous instances, the model can better understand and generate the desired output. For instance, showing translated sentences before asking for a new translation.

Chain-of-Thought (CoT): It guides the model through reasoning steps. Breaking down complex tasks into “chains of reasoning” enhances language understanding and accuracy. It’s akin to step-by-step guidance through a complex problem.
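Here is a minimal sketch of how these three prompt styles differ in practice. The helper names (`few_shot_prompt`, `chain_of_thought_prompt`), the "Input:" and "Output:" labels, and the step-by-step cue sentence are illustrative assumptions rather than a fixed standard.

```python
def few_shot_prompt(task, examples, query):
    """Build a prompt that shows worked examples before the real query.

    Passing an empty list of examples produces a zero-shot prompt.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

def chain_of_thought_prompt(question):
    """Ask the model to reason through intermediate steps before answering."""
    return (
        f"{question}\n"
        "Work through this step by step, then state the final answer."
    )

examples = [("Good morning", "Bonjour"), ("Thank you", "Merci")]

zero_shot = few_shot_prompt("Translate English to French.", [], "Good night")
few_shot = few_shot_prompt("Translate English to French.", examples, "Good night")
cot = chain_of_thought_prompt(
    "A train travels 120 km in 2 hours. What is its average speed?"
)

print(zero_shot, few_shot, cot, sep="\n\n---\n\n")
```

The few-shot version ends with an unfinished "Output:" line, which nudges the model to continue the pattern set by the examples.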

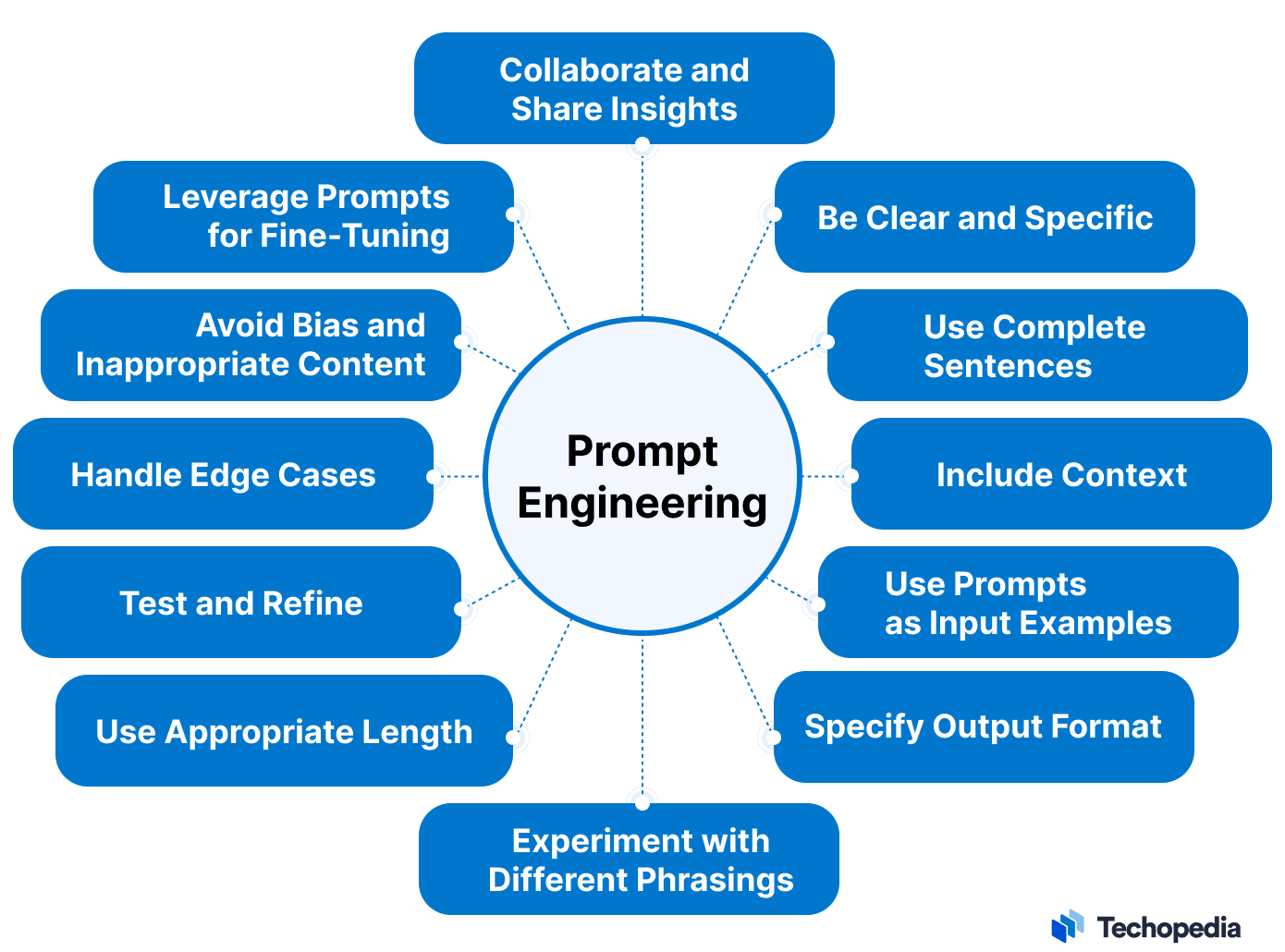

Tips for Creating Effective AI Prompts for Better Outcomes

When users engage with AI tools like ChatGPT, Google Bard, OpenAI’s DALL-E 2, or Stable Diffusion for text-to-text or text-to-image tasks, it’s essential to know how to frame prompts effectively to achieve desired outcomes.

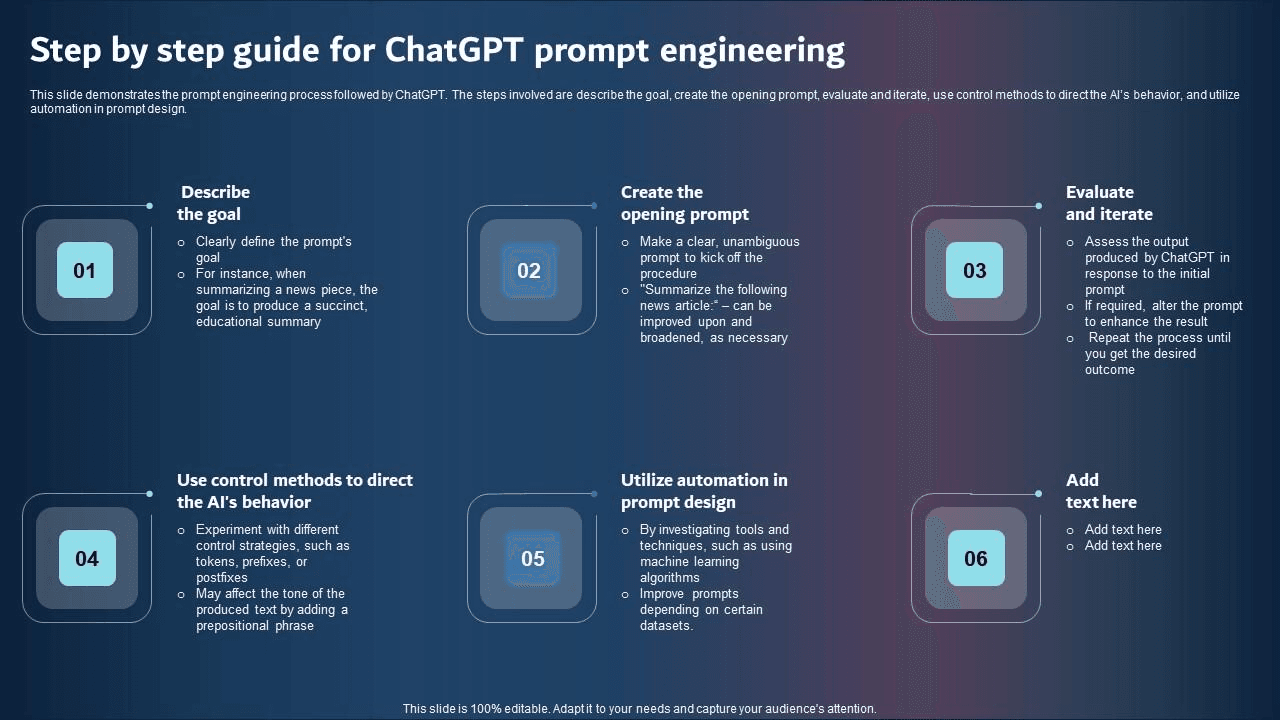

1. Define the Goal

Before writing prompts, users should clearly establish the purpose and intended outcome. Whether it’s generating a concise blog post or an image depicting specific features, clarity on the goal is essential.

2. Provide Specific Details and Context

Effective prompts should include precise instructions and relevant context. Details about desired traits, colors, textures, or aesthetic styles aid the AI model in understanding requirements and producing accurate results.

3. Incorporate Keywords and Phrases

Utilizing relevant keywords and phrases in prompts enhances search engine optimization and helps convey preferred terms effectively.

4. Keep Prompts Concise

While complexity can aid the AI in understanding, prompts should ideally be concise, consisting of three to seven words, to avoid overwhelming the model with unnecessary details.

5. Avoid Contradictory Terms

Clear and consistent language prevents confusion within the AI model. Conflicting terms in prompts may lead to undesired outputs.

6. Ask Open-ended Questions

Open-ended prompts encourage detailed responses, fostering richer content generation. Instead of binary queries, opt for questions that prompt exploration and elaboration.

7. Leverage AI Tools

Numerous AI platforms offer customizable prompt generation, including ChatGPT, DALL-E, and Midjourney. Leveraging these tools streamlines prompt creation and enhances AI-generated content quality.
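A couple of these tips can be turned into a quick self-check before you send a prompt. The sketch below is a deliberately simple heuristic: the list of contradictory word pairs and the list of yes/no question openers are made-up examples, and `review_prompt` is a hypothetical helper, not a feature of any AI tool.

```python
import re

# Hypothetical pairs of terms that pull the output in opposite directions.
CONTRADICTORY_PAIRS = [
    ("brief", "detailed"),
    ("formal", "casual"),
    ("minimalist", "ornate"),
]

# Words that typically open a closed (yes/no) question.
CLOSED_OPENERS = ("is", "are", "does", "do", "can", "will", "should")

def review_prompt(prompt):
    """Return a list of warnings for a draft prompt (empty if none apply)."""
    words = re.findall(r"[a-z']+", prompt.lower())
    warnings = []
    for first, second in CONTRADICTORY_PAIRS:
        if first in words and second in words:
            warnings.append(f"contradictory terms: '{first}' and '{second}'")
    if words and words[0] in CLOSED_OPENERS and prompt.strip().endswith("?"):
        warnings.append("closed yes/no question; consider an open-ended one")
    return warnings

print(review_prompt("Write a brief but detailed overview of solar power."))
print(review_prompt("Is solar power useful?"))
print(review_prompt("How does solar power compare with wind power for home use?"))
```

The first two drafts each trigger one warning, while the third, an open-ended question with no conflicting terms, passes cleanly.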

The Role of a Prompt Engineer

As technology evolves and AI becomes more popular, a critical role has surfaced – the Prompt Engineer. This role acts as a bridge between human intentions and machine responses, ensuring that AI models understand prompts effectively and generate appropriate outputs.

Is Prompt Engineering the Next Big Thing in AI Careers?

With the rapid progress in Natural Language Processing (NLP) and the widespread adoption of Large Language Models (LLMs), a new career path is emerging: AI prompt engineering. These specialists are not just tech-savvy individuals; they are artists who understand language intricacies and AI nuances.

Companies, both established and startups, are increasingly recognizing the importance of prompt engineers. As AI technologies become more integrated into various products and services, prompt engineers ensure that these solutions are not only effective but also user-friendly and contextually relevant.

According to reports from reputable sources like Time Magazine, the demand for prompt engineers is soaring. Job listings on platforms like Indeed and LinkedIn show thousands of openings across the US, with salaries ranging from $50,000 to over $150,000 annually.

What Skills does a Prompt Engineer need?

AI prompt engineers are in high demand at major technology firms, tasked with developing innovative content, addressing complex queries, and refining machine translation and NLP tasks. Key skills for prompt engineers include:

- Familiarity with large language models

- Effective communication

- Technical aptitude

- Proficiency in Python

- Solid grasp of data structures and algorithms.

Creativity and a realistic assessment of technology risks are also valued traits.

While generative AI models are multilingual, English remains predominant in training. Hence, prompt engineers require profound knowledge of vocabulary, nuance, and linguistics, as each word in a prompt influences outcomes significantly. Effective conveyance of context, instructions, or data to the AI model is important.

ChatGPT Prompt engineers must understand coding principles for generating code and possess knowledge of art history, photography, or film terms for image generation tasks. Those dealing with language context may benefit from familiarity with various narrative styles or literary theories.

Proficiency in generative AI tools and deep learning frameworks is essential. Advanced techniques such as zero-shot prompting, few-shot prompting, and chain-of-thought prompting are utilized to enhance model understanding and output quality.

Zero-shot prompting tests the model’s ability to generate relevant outputs for untrained tasks, while few-shot prompting provides context for learning, and chain-of-thought prompting facilitates step-by-step reasoning.

Effective Strategies to Become a Prompt Engineer

1. Grasp the fundamentals from NLP libraries and frameworks

To start with natural language processing (NLP), learn about fundamental concepts like tokenization, part-of-speech tagging, named entity recognition, and syntactic parsing. Explore NLP libraries like NLTK for versatile tools and datasets, spaCy for efficient processing with pre-trained models, and Hugging Face Transformers for access to pre-trained transformer models such as GPT-2 and BERT. Practice tasks like text preprocessing, sentiment analysis, and language generation using these resources.

2. Understand and Experiment with ChatGPT and transformer models

To master transformer models like those behind ChatGPT, dig into their architecture and mechanisms, such as self-attention, the decoder-only structure used by GPT models, and positional encoding. Use pre-trained GPT models such as GPT-2 (openly downloadable) or GPT-3 (available through OpenAI’s API) to experiment with various prompts, building an understanding of the model’s text generation capabilities through hands-on practice. This practical approach enhances comprehension of ChatGPT’s behavior and capabilities.

3. Be aware of ethical considerations and bias in AI

Prompt engineers must prioritize ethical considerations, recognizing potential biases in AI models. They should adhere to responsible AI development practices, staying informed about bias mitigation techniques and guidelines. This proactive approach ensures the creation of fair and unbiased AI systems.

4. Stay current with the latest updates

To stay current in the constantly growing fields of NLP and AI, prompt engineers should engage with reputable sources, participate in conferences, and actively interact with the AI community. This ongoing involvement ensures awareness of the latest techniques, models, and research developments, particularly concerning ChatGPT.

5. Collaborate and contribute to real-world open-source projects

Active participation in open-source projects related to NLP and AI is essential for prompt engineers to collaborate, contribute, and gain practical experience. By applying their skills to real-world projects and addressing specific use cases with ChatGPT, prompt engineers can build a strong portfolio, showcase their expertise, and prepare themselves for impactful contributions in the AI and NLP domains.


What lies ahead in the Future of Prompt Engineering?

As we approach an AI-dominated era, prompt engineering emerges as a critical factor in defining how humans interact with AI systems. Despite being a young field, it shows significant promise and potential for shaping the future of human-AI interactions.

Research and Development

As AI continues to make strides in every industry vertical, ongoing research is pushing the boundaries of prompt engineering:

Adaptive prompting: Scientists are delving into methods for models to autonomously generate prompts, adjusting them based on context to minimize manual intervention.

Multimodal prompts: With the advent of AI models capable of processing text and images, prompt engineering now includes visual elements, broadening its scope.

Ethical prompting: Given the growing importance of AI ethics, efforts are directed towards creating prompts that prioritize fairness, transparency, and bias mitigation.

What Is Its Value and Relevance in the Long Term?

AI Prompt engineering is not a passing fad but a lasting necessity. As AI permeates various sectors, from healthcare to entertainment, effective communication between humans and machines becomes crucial. Prompt engineers will ensure accessibility, user-friendliness, and contextual relevance in AI applications.

Furthermore, as AI becomes more accessible to non-technical users, prompt engineers will adapt their role. They’ll focus on creating intuitive interfaces, crafting user-friendly prompts, and maintaining AI as a tool that enhances human capabilities.

That’s a Wrap!

In summary, prompt engineering is all about effective communication. We’ve learned that with ChatGPT, it’s more than just giving orders to a machine. It’s about having conversations that actually make sense and give us the results we want. To get good at this, you need to practice, learn as you go, and be a little creative. Prompt engineering is becoming a popular career choice as AI tools like ChatGPT gain popularity. For more information regarding the concepts of prompt engineering or AI&ML development services, our experts at DianApps are available to assist! Feel free to contact our experts.
