“Artificial Intelligence is no longer the future; it’s already everywhere.”

That’s not just a bold statement; it’s the reality Google laid out at I/O 2025.

This year’s event wasn’t just about small updates and flashy features. It was a clear signal that we are entering a new era in which AI isn’t just part of the product; it is the product.

Google presented some of its most innovative updates yet, showing how deeply artificial intelligence is now embedded in everything from Search and Android to productivity tools, hardware, and beyond.

At the center of it all was Gemini 2.5, Google’s most intelligent AI model yet. But that was just the beginning. Google also showcased Android XR for immersive experiences, demonstrated the real-time intelligence of Project Astra, and pushed creative boundaries with Veo 3.

If you’re wondering what all of this means for developers, users, and the future of tech, you’re in the right place. Here are the biggest announcements and why they matter.

Why Is AI Now Central to Google’s Vision?

At Google I/O 2025, one thing was crystal clear: AI is no longer an add-on technology for Google; it is now at the core of everything the company does. As Google CEO Sundar Pichai put it, “More intelligence is available, for everyone, everywhere.”

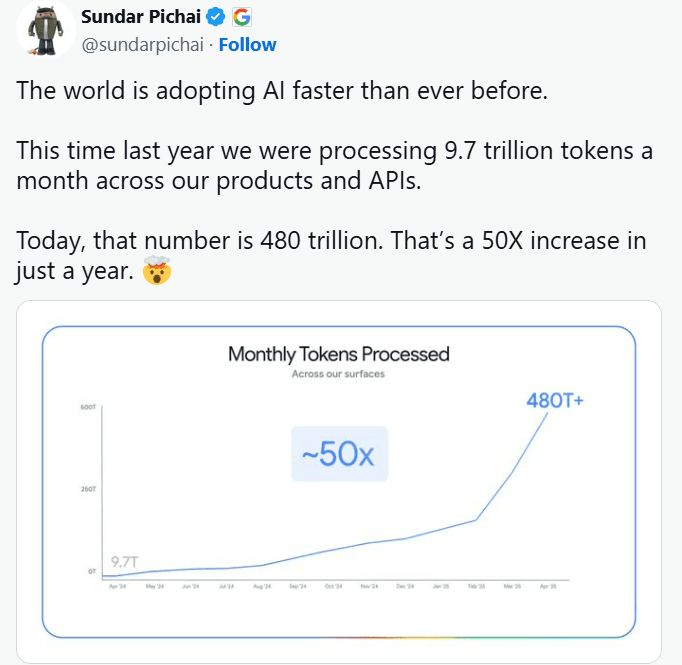

Think of it like this: AI makes products smarter and more personal. It’s good at understanding what you need, automating complicated or time-consuming tasks, and pulling key information out of lengthy content, freeing people up for more important work. Adoption is growing fast, too: Google said the number of tokens it processes each month across its products and APIs has grown about 50X in just one year.

This means Google Search becomes more helpful, apps feel more natural, and future tools like Project Astra aim to anticipate what you need next. In short, Google sees AI as the key to making technology work better, smarter, and more seamlessly for everyone.

What Were the Biggest Announcements at Google I/O 2025?

Deedy Das, a former senior software engineer at Google, called this the best Google I/O yet. Let’s look at the major innovations and updates from the event.

Gemini 2.5 Pro and Multimodal Intelligence

Google showcased upgrades to Gemini 2.5 Pro, a beast of a model focused on deeper reasoning, coding, and logic.

It can handle massive contexts (up to 1 million tokens), and Google previewed Deep Think, an experimental enhanced reasoning mode for highly complex math and coding tasks.

Also new: an updated Gemini 2.5 Flash, a lighter model designed for speed and cost-efficiency and well suited to fast, high-volume, real-time use.

Multimodal capabilities got a major boost, too. Gemini now understands images, audio, video, and screen context at once.

AI in Google Search

Your search just got smarter and more human-centric.

AI Mode, a Gemini-powered search experience for longer, conversational queries, is rolling out to all U.S. users, while AI Overviews continue to provide concise, AI-generated answers above traditional results.

Now you can ask complex, multi-part questions using voice, images, or a mix of both. Need help planning a vacation? Just describe it in natural language, and Gemini can build an itinerary, suggest flights, and recommend hotels.

With your permission, Search can also draw on personal context, such as your past searches and connected Google apps like Gmail, to tailor its answers.

Shopping also got a glow-up: you can virtually try on clothes by uploading a photo of yourself.

Project Astra and Gemini Live

Real-time AI just reached the next level.

With Gemini Live, you can talk to Gemini using your voice instead of typing. Just speak, and it responds like a human assistant.

Thanks to Project Astra, it also sees what you see. Point your camera at something, and Astra will describe, analyze, or even explain it.

There’s also an early version of Agent Mode, where Gemini performs tasks like trip planning, comparing laptops, or even summarizing your notes across apps.

The goal? Build AI that doesn’t just respond but acts and reasons like a helpful digital agent.

Generative Media Tools: Imagen 4, Veo 3 & Flow

Creators, take note: Google’s new tools are raising the bar for content creation.

- Imagen 4: Create ultra-realistic images with simple prompts.

- Veo 3: Generate high-quality videos with native audio, including sound effects, ambient noise, and dialogue.

- Flow: An AI filmmaking tool, built on Veo, Imagen, and Gemini, that simplifies storytelling.

Musicians got a treat with Lyria 2, and SynthID watermarks content generated with these tools so it can be identified as AI-made.

Android & XR Ecosystem Innovations

Get ready for next-gen wearables and immersive experiences.

Google showed Android XR running on smart glasses and announced eyewear partnerships with Gentle Monster and Warby Parker.

Demos featured real-time translation, navigation overlays, and contextual recognition of what you’re looking at.

Also on display: Project Moohan, Samsung’s Gemini-powered XR headset built on Android XR with Qualcomm, expected to launch later this year.

Developers, rejoice! Android Studio is gaining new Gemini-powered tools, including image-to-code generation and Journeys, which lets you describe end-to-end app tests in natural language.

Next-Gen Developer Tools

From idea to deployment, AI has become your coding partner.

Google AI Studio can now generate full apps and handle complex logic tasks.

Colab goes beyond autocomplete: it’s becoming an agentic collaborator that can plan, fix errors, and restructure your code.

Meet Jules, an asynchronous coding agent that works directly with your GitHub repositories to fix bugs, write tests, and update dependencies. And don’t miss Stitch, which turns text prompts or UI sketches into UI designs and front-end code.

AI Infrastructure and Open Models

Behind all this innovation is serious hardware muscle.

Google highlighted Ironwood, its seventh-generation TPU, built to run demanding reasoning and inference workloads at scale.

Open models got an upgrade, too:

- Gemma 3n: For local, high-performance use.

- SignGemma: Translates sign language into text.

- MedGemma: Built for medical imaging and healthcare analytics.

Google Meet Speech Translation

Google I/O also introduced a game-changing update: real-time speech translation in Google Meet.

Powered by Google AI, this feature translates conversations almost instantly, helping people communicate effortlessly across different languages.

What makes it unique is that it preserves the voice, tone, and expressiveness of the original speaker.

It’s a huge step toward breaking language barriers and making virtual meetings feel more human and connected.

Responsible AI and Ethics

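Google didn’t just ship tools; it doubled down on ethics.

Images, video, and audio generated with Google’s AI tools carry SynthID watermarks that are invisible to humans but detectable by machines, and Google announced SynthID Detector, a portal for checking whether content carries one.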

Plus, Google uses AI red-teaming to expose edge cases and biases before release.

As Sundar Pichai said, “We’re committed to building AI that earns your trust.”

What Do These Updates Mean for Developers, Users, and the AI Future?

The announcements from Google I/O matter for developers, users, and the future of AI. Let’s explore what they mean for each.

For Developers:

AI isn’t just assistive anymore; it’s collaborative.

From auto-generating apps to real-time debugging, tools like Jules, Stitch, and Journeys in Android Studio are reducing development complexity and freeing up time for creative work.

For Users:

Daily life is becoming more seamless, intuitive, and personalized.

Imagine planning a trip, managing your inbox, or creating a music video, all with a simple prompt. That’s the power shift Gemini is delivering.

For the AI Future:

This is the era of agentic computing.

AI is no longer reactive; it’s proactive. It sees, hears, and acts with intent.

Whether you’re coding, shopping, driving, or storytelling, AI is stepping in as your partner, not just a tool.

Final Words: Is Google I/O 2025 the Most AI-Centric Yet?

On a final note, Google I/O 2025 made one thing crystal clear: AI is now the driving force behind Google’s innovation. Whether you’re a developer, a startup, or an established business, the tools and insights from this event are set to transform how we build and interact with technology.

With powerful APIs, coding agents, and multimodal models, developers now have new ways to accelerate delivery and scale smartly. For businesses offering custom software development services, it’s time to adopt these updates to stay ahead of the curve. One thing’s for sure: AI is now central to the future of software development.
