AI Overview: Claude Opus 4.6

|

Claude Opus 4.6 Isn’t Just an Upgrade. It’s a Strategic Shift.

AI model releases are frequent. Genuine inflection points are rare. Claude Opus 4.6 lands firmly in the second category.

Anthropic’s latest flagship model is engineered for something the industry has been inching toward for years: AI that can sustain deep reasoning, operate across massive context, and coordinate multi-agent workflows without collapsing under complexity. This isn’t about marginal gains in chatbot fluency.

It’s about enabling AI to handle enterprise-scale cognitive load, long codebases, dense legal documents, multi-step research, and autonomous task orchestration.

The numbers don't lie: In the first 48 hours post-launch, Thomson Reuters plummeted 15.83%, LegalZoom dropped 20%, and the broader Nasdaq suffered its worst two-day tumble since April.

The JPMorgan U.S. software index fell 7% in a single day. Investors aren't just worried. they're repositioning for a fundamentally different future.

What makes Opus 4.6 materially different is the combination of three capabilities:

- Extended context window (up to ~1,000,000 tokens in beta): allowing the model to ingest and reason over volumes of information that previously required aggressive chunking and fragile retrieval pipelines

- Improved reasoning & planning stability: better multi-step execution, fewer derailments in long workflows

- Agent-centric architecture: including support for Agent Teams, where multiple AI subagents collaborate in parallel on complex tasks

In practical terms, this means fewer context resets, more coherent long-form outputs, stronger large-scale coding assistance, and a measurable reduction in human micromanagement during AI-assisted workflows.

The timing is equally important. Enterprises are moving from experimentation to AI operationalization, where cost efficiency, reliability, controllability, and safety matter more than novelty. Opus 4.6 is explicitly positioned for that phase: productivity, automation, and real work.

In this deep dive, we’ll break down:

- What Claude Opus 4.6 actually is

- How it evolved from previous Claude Opus versions

- Benchmarks vs GPT-5.2 and other frontier models

- How Agent Teams work and why they matter

- Pricing and access considerations

- Real-world coding and enterprise use cases

- Limitations, failure modes, and “overthinking” behaviors

- What AI experts are saying

If you’re evaluating next-gen AI models for productivity, development, automation, or strategic adoption, this isn’t optional reading. It’s due diligence.

Let’s start with where Opus 4.6 fits in the Claude lineage.

Claude Opus Evolution: From Powerful Model to Enterprise-Grade AI Engine

Before analyzing why Claude Opus 4.6 is considered a leap forward, we need context. Not marketing context, capability context. Each Opus iteration didn’t just add features; it tightened reasoning reliability, expanded memory limits, and pushed Claude closer to long-running, agent-style work.

Here’s the progression that matters.

Claude Opus 4.0: The Intelligence Foundation

What defined it:

- Positioned as Anthropic’s high-end reasoning model

- Strong performance in structured thinking, analysis, and long-form writing

- Clear emphasis on safety-aligned responses

Where it excelled:

- Complex Q&A

- Research synthesis

- Early enterprise experimentation

Where friction appeared:

- Long workflows could drift

- Context limits still constrained very large documents/codebases

- Multi-step autonomy required heavy prompting

Bonus Read: Prompt Engineering for ChatGPT

Opus 4.0 proved Claude could compete at the frontier, but sustained agentic work was still emerging.

Claude Opus 4.1: Stability & Reasoning Refinement

Key improvements:

- Better logical consistency

- Reduced hallucination frequency in technical tasks

- Improved instruction following

Impact:

- More dependable for professional use

- Noticeable gains in coding comprehension

- Still bounded by context window constraints

All-in-all, less flashy, more important, 4.1 quietly improved trustworthiness.

Claude Opus 4.5: The Capability Expansion Phase

This version marked a visible shift toward heavy-duty workloads.

Major upgrades:

- Stronger coding & debugging performance

- Better handling of nuanced reasoning tasks

- Improved latency vs depth balance

Why it mattered:

- Developers began using Claude for real production workflows

- Enterprises started exploring Claude for document analysis & automation

- Claude became a serious GPT alternative, not just a competitor

Remaining bottlenecks:

- Very large context scenarios still required chunking/RAG gymnastics

- Agent orchestration still limited

Opus 4.5 moved Claude from “capable assistant” to credible work partner.

Claude Opus 4.6: The Strategic Inflection Point

Opus 4.6 isn’t just “better.” It redefines operational boundaries.

Breakthrough shifts:

1. Massive Context Window (Up to ~1M Tokens in Beta)

- Enables reasoning across entire codebases

- Supports long contracts, research archives, technical documentation

- Reduces fragmentation errors from excessive chunking

2. Enhanced Reasoning & Planning Durability

- More coherent multi-step workflows

- Fewer logic collapses in long sessions

- Stronger structured problem solving

3. Agent-First Capabilities (Agent Teams)

- Multiple AI agents collaborate in parallel

- Suitable for large engineering/research tasks

- Moves Claude into orchestration territory

4. Effort Controls & Adaptive Thinking

- Tunable depth vs speed vs cost

- Prevents unnecessary compute burn

- Improves enterprise cost predictability

5. Extended Output Capacity

- Handles long technical outputs (design docs, code modules, reports)

What Actually Changed Across Versions?

Why This Evolution Matters?

Most AI upgrades deliver incremental intelligence. Opus 4.6 delivers operational expansion:

- Larger memory horizon

- Longer autonomous workflows

- Parallelized AI collaboration

- More controllable compute economics

That combination changes how companies design:

- AI copilots

- Dev productivity systems

- Document intelligence pipelines

- Autonomous task agents

- Research automation

What Exactly Is Claude Opus 4.6?

Claude Opus 4.6 is Anthropic’s most advanced flagship language model, designed for high-complexity reasoning, enterprise workflows, large-scale coding, and agent-driven task execution.

It represents the top tier of Anthropic’s Claude family, where the emphasis is placed on capability, reliability, and depth rather than lightweight speed.

This model is best understood not as a chatbot iteration, but as a general-purpose cognitive engine intended to operate across long contexts, multi-step objectives, and professional workloads.

Core Model Identity

Claude Opus 4.6 is:

- A frontier-class large language model (LLM)

- Optimized for deep reasoning and structured problem solving

- Built to sustain performance across extremely large inputs

- Tunable for cost, latency, and cognitive effort

- Designed for agentic and semi-autonomous workflows

Within Anthropic’s lineup, the Opus series consistently targets maximum intelligence and analytical strength, positioned above Sonnet (balanced) and Haiku (fast/lightweight).

Context Window Expansion

One of the defining technical shifts in Opus 4.6 is its extended context window, supporting up to approximately 1,000,000 tokens in beta environments.

Why this matters:

- Enables analysis of very large documents without aggressive chunking

- Allows reasoning across extensive codebases in a single session

- Reduces retrieval fragmentation in RAG pipelines

- Improves coherence in long, multi-document workflows

- Minimizes “lost context” failures common in smaller-window models

In practical terms, this changes how teams design AI systems. Instead of engineering around memory limits, architects can focus on reasoning accuracy and workflow design.

Reasoning and Planning Improvements

Claude Opus 4.6 introduces refinements aimed at improving durability of reasoning across long sessions. These include:

- Greater logical consistency

- Improved multi-step planning stability

- Stronger instruction adherence

- Better self-correction patterns

- Reduced mid-task drift

These upgrades are particularly important for tasks such as:

- Complex coding and debugging

- Long-form technical writing

- Research synthesis

- Legal or financial analysis

- Agent-mediated workflows

Earlier models across the industry often demonstrated strong intelligence but degraded performance in extended reasoning chains. Opus 4.6 targets that weakness directly.

Agent-First Capabilities

Claude Opus 4.6 is explicitly optimized for agentic usage patterns, where the model functions as an active operator rather than a passive responder.

This includes support for Agent Teams, a system in which multiple Claude instances collaborate on partitioned subtasks.

Key characteristics:

- Parallelized problem solving

- Task specialization across subagents

- Coordinated synthesis of outputs

- Cross-verification of reasoning

- Improved scalability for large objectives

This architecture is especially relevant for:

- Large engineering projects

- Codebase audits

- Research decomposition

- Multi-document analysis

- Autonomous workflow execution

Adaptive Thinking and Effort Controls

Opus 4.6 incorporates mechanisms allowing developers to regulate computational intensity.

Effort parameter:

Enables tuning between speed, reasoning depth, and cost.

Adaptive thinking:

Allows the model to dynamically adjust cognitive effort depending on task complexity.

Enterprise implications:

- More predictable cost structures

- Reduced over-computation

- Workload-specific optimization

- Better latency management

Output Capacity

Claude Opus 4.6 supports significantly expanded output limits (e.g., long-form responses approaching ~128K tokens).

This enables:

- Extended technical documentation

- Multi-file code generation

- Detailed analytical reports

- Long structured reasoning outputs

Coding and Technical Workloads

Claude Opus 4.6 is heavily optimized for software-related tasks:

- Code generation

- Debugging and refactoring

- System design reasoning

- Dependency analysis

- Large repository comprehension

Frequently cited strengths include:

- Better long-range code coherence

- Stronger reasoning across architectural layers

- Improved bug identification patterns

- More consistent multi-file logic handling

Safety and Alignment Framework

Anthropic continues its emphasis on Constitutional AI alignment and model safety transparency. Opus 4.6’s System Card outlines:

- Risk assessments

- Stress-testing scenarios

- Observed failure modes

- Agent behavior evaluations

This is particularly relevant as models become more autonomous and capable of long-running actions.

Expert Perspective

Technology analysts reviewing Claude Opus 4.6 have highlighted its significance for long-context and reasoning-heavy workloads.

For example, evaluations comparing Claude Opus 4.6 with competing frontier models note that its extended context window and reasoning stability make it particularly strong in coding, document analysis, and multi-step problem solving, while trade-offs may appear in latency-sensitive scenarios depending on configuration (Tom’s Guide benchmark comparisons).

This reflects a broader industry observation: Opus 4.6 is not merely competing on conversational quality, but on operational intelligence under complexity.

The Numbers That Matter: Performance Breakdown

Benchmarks are boring, until they're not. When a model jumps from 37.6% to 68.8% on the ARC AGI 2 benchmark (nearly doubling its score), people pay attention. When it crushes GPT-5.2 by 144 Elo points on real-world enterprise tasks, investors take notice.

Here's where Opus 4.6 absolutely dominates the competition:

Key takeway:

Opus 4.6 wins decisively on agentic workflows, coding, and enterprise tasks. GPT-5.2 edges ahead on pure mathematics. Gemini 3 Pro leads on massive context and multimodal reasoning.

Also read: Amazon Bedrock Vs ChatGPT

Real-world impact: Beyond the benchmarks

Benchmarks are one thing. Real companies betting their workflows on this technology? That's where it gets interesting. And the testimonials coming in are nothing short of remarkable.

"This no longer feels like a tool, but a truly capable collaborator." – Sarah Sachs, Head of AI at Notion

Let's talk specifics. Rakuten, the Japanese e-commerce giant, deployed Opus 4.6 across their 50-person engineering organization. In a single day, the AI autonomously closed 13 issues and correctly assigned 12 more to the appropriate team members. No human intervention.

The Norway Sovereign Wealth Fund, one of the world's largest investors, ran Opus 4.6 through 40 cybersecurity investigations. It outperformed the previous Claude 4.5 model in 38 out of 40 cases. These aren't toy problems; these are real investigations protecting billions in assets.

Harvey, the legal AI company, achieved a 90.2% score on the BigLaw Bench with 40% perfect scores. That's the kind of performance that makes senior partners at white-shoe law firms start sweating about their $800/hour billing rates.

Impact by the numbers:

- 90.2% - Legal accuracy on complex cases

- 80.8% - Real code issues solved automatically

- 5x - Context window expansion over previous models

The $285 billion question: The saasapocalypse

Now we arrive at the elephant in the room, or rather, the $285 billion crater in the market. Why did investors panic so spectacularly?

Because Opus 4.6 doesn't just compete with enterprise software. It threatens to replace it entirely.

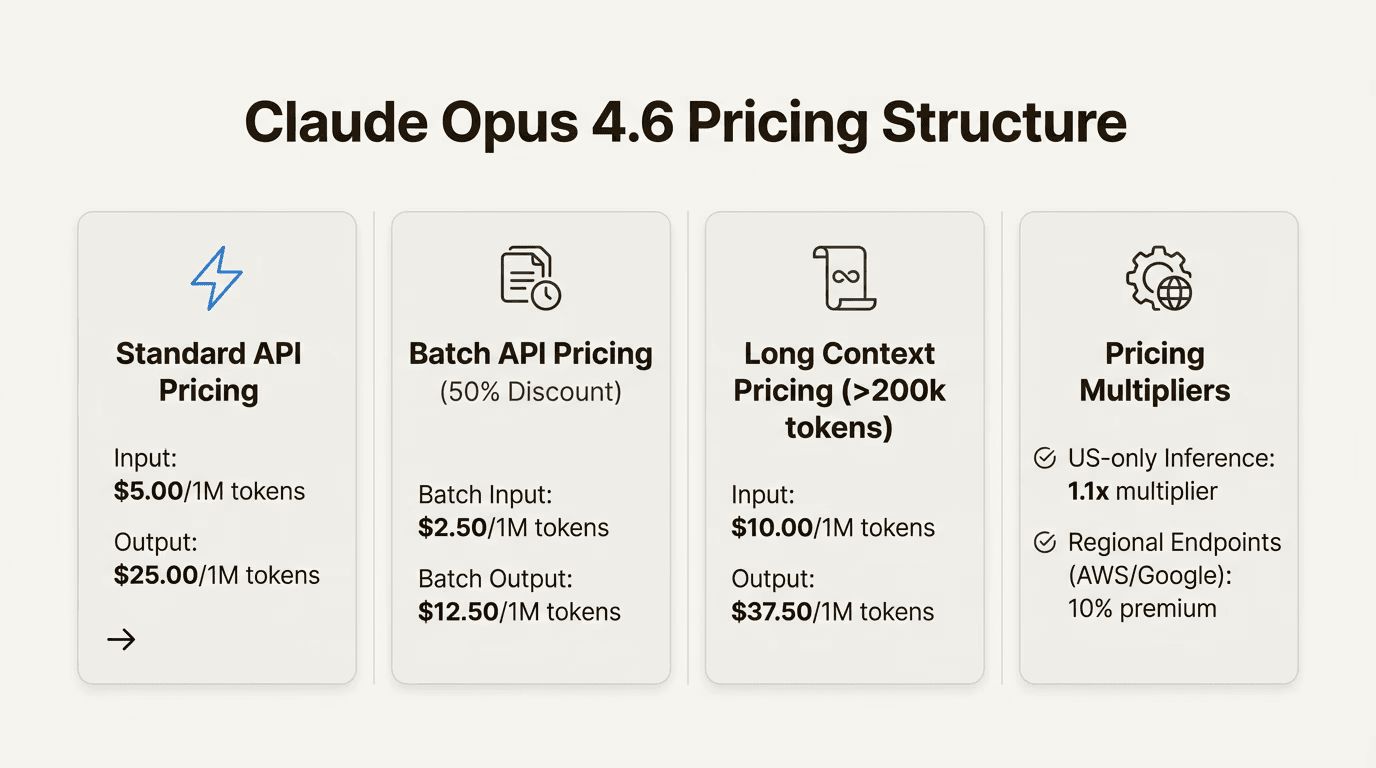

Consider the math: A typical enterprise Salesforce deployment costs around $350,000 annually. Claude Opus 4.6's API pricing? $5 per million input tokens, $25 per million output tokens. Even with heavy usage, you're talking about a cost reduction of 90-95%.

The disposable software revolution: Why pay for a permanent CRM when you can have AI create a custom one for your specific workflow, use it for a project, and discard it? The "agent teams" feature means complex, multi-component applications can be built on-demand for pennies on the dollar.

The market's reaction was swift and brutal. Here's the carnage:

Stock market impact:

- Thomson Reuters: -15.83% (one day)

- LegalZoom: -20% (one day)

- FactSet: -9.1%

- S&P Global: -4.2%

- Salesforce, Workday, SAP: 3-6% drops continuing multi-week declines

- JPMorgan U.S. software index: -7% in one day, -18% year-to-date

The Bull Vs. Bear Debate

Wedbush analyst Dan Ives calls the panic "overblown," while Gartner suggests these concerns are premature. But even the bulls acknowledge: something fundamental has shifted.

What users are actually saying?

Beyond the executive quotes and analyst reports, what's happening in the trenches? The Reddit developer communities and Discord channels paint a more nuanced picture.

"Excels on the hardest problems. Greater persistence, stronger code review, ability to stay on long tasks where other models give up.", Michael Truell, Co-founder of Cursor

The good:

- Coding prowess: Developers report unprecedented ability to handle massive codebases and multi-file refactoring

- Persistence: Unlike earlier models, Opus 4.6 doesn't give up and suggest you "try a different approach"

- First-try accuracy: Production-ready outputs without multiple revision rounds

- Complex reasoning: Can hold architectural decisions in mind across thousands of lines of code

The not-so-good:

- "Opus 4.6 lobotomized": Complaints about writing quality regression for creative/technical documentation

- Token consumption crisis: Max subscribers hitting weekly quotas in 8-12 hours instead of days

- Jagged performance: Brilliant on some tasks, inconsistent on others, high variance

- Verbosity: Generates 11M tokens on average vs. 3.8M for competitors on similar benchmarks

- User consensus: Use Opus 4.6 for complex coding and enterprise workflows. Stick with 4.5 or competitors for creative writing and quick tasks.

The technical deep dive: What's under the hood?

For the technically inclined, let's dig into what makes Opus 4.6 tick:

1. The million-token context revolution

This isn't just "bigger is better." The model achieves 76% on the MRCR v2 benchmark (Massive Retrieval and Complex Reasoning), compared to Sonnet 4.5's 18.5%. That's the difference between theoretical capability and practical usability. You can actually feed it an entire enterprise codebase and have it understand the relationships between components.

2. Agent teams architecture

Each agent in a team maintains its own 1M context window and can communicate peer-to-peer. This isn't just parallel processing, it's genuine collaborative intelligence. One agent handles backend architecture while another focuses on frontend components, exchanging context as needed.

3. Adaptive thinking mechanism

Previous models had a binary choice: think deeply or respond quickly. Opus 4.6 operates on a spectrum with four effort levels (low, medium, high, max). The model autonomously decides when deeper reasoning helps and when it's overkill. This significantly reduces token waste on simple queries while maintaining performance on complex ones.

4. Context compaction technology

Server-side summarization of older conversation context enables practically infinite conversations. The system automatically identifies less-relevant portions of context and compacts them without losing critical information threads. This is the feature that enables those "three-day coding projects" developers are raving about.

Integration ecosystem: Beyond the API

Anthropic isn't just releasing a model, they're building an ecosystem. Two integration announcements flew somewhat under the radar but represent significant strategic moves:

Claude in powerpoint (research preview)

Direct integration into Microsoft's presentation software. The AI matches existing templates, colors, and fonts, creating slides that look like a human made them, not a robot. This is a direct shot across the bow at Microsoft Copilot, which ironically runs on OpenAI's technology.

Enhanced claude in excel

Better at long-running tasks, pivot tables, complex chart modifications, and finance-grade formatting. The system can now handle multi-hour spreadsheet operations without losing context or making errors that compound.

These integrations matter because they meet users where they already work, rather than forcing them to learn new tools or workflows.

The honest assessment: drawbacks and limitations

Let's be clear-eyed about this. Opus 4.6 isn't perfect, and depending on your use case, it might not even be the right choice. Here's what's genuinely problematic:

Cost & token consumption

The adaptive thinking feature, while powerful, burns through tokens at roughly 5x the rate of Opus 4.5. Users with $200/month Max subscriptions report hitting their weekly limits in less than 12 hours of moderate use. One user posted: "Not even half a day and already at 20% weekly usage. This is almost unusable for daily work."

Writing quality regression

Multiple independent reports confirm that Opus 4.6 produces lower-quality creative and technical writing compared to 4.5. The model seems optimized for structured, logic-heavy tasks at the expense of prose quality. If you're writing marketing copy or blog posts, you might want to stick with earlier versions.

Access restrictions

The headline features aren't universally available yet:

- Million-token context: Beta, API tier 4+ only (not yet for claude.ai Max subscribers)

- Agent Teams: Research preview with limited access

- PowerPoint integration: Research preview only

The "JAGGED" performance problem

High variance means the model might deliver a perfect solution on attempt one or struggle with something simple on attempt five. It can hallucinate, state speculative answers confidently, or report success when it actually failed. This unpredictability makes it challenging for production workflows where consistency matters as much as capability.

The competitive landscape: where do we go from here?

Something fascinating happened on February 5th: both Anthropic and OpenAI released major model updates on the same day. This wasn't coincidence, it was strategic coordination, ensuring neither company could claim a decisive advantage for more than a few hours.

We've entered what industry insiders call the "post-benchmark era." When multiple models score 80%+ on real-world coding tasks and 90%+ on legal reasoning, the differences become more about fit than capability. It's like comparing elite athletes, you're splitting hairs at that level.

"Developer skill in 'managing agents' now matters more than model choice. Most power users are running multiple models for different tasks." Industry consensus emerging from developer communities

The practical reality for most organizations:

- Complex coding projects → Opus 4.6 or GPT-5.3 Codex

- Abstract mathematics → GPT-5.2

- Massive multimodal reasoning → Gemini 3 Pro

- Enterprise knowledge work → Opus 4.6

- Creative writing → Claude Sonnet 4.5 or GPT-4.7

Also read: How to use ChatGPT 5 for free!

What this means for you?

So where does this leave us? Let's break it down by stakeholder:

if you're a developer:

Opus 4.6 is probably worth the upgrade for complex projects. Expect to hit token limits faster, but the quality improvements on difficult reasoning tasks are real. Consider maintaining access to multiple models and routing tasks based on complexity.

If you're an enterprise decision-maker:

The economics are too compelling to ignore, but don't panic-migrate your entire stack. Start with pilot projects in non-critical areas. The technology is mature enough for production use in specific domains (coding, legal research, document processing) but still jagged enough that you'll want human oversight.

If you work in saas:

Time to get serious about your AI strategy. The "wrap AI around our existing product" approach won't cut it. You need to be thinking about how to rebuild your product AS an AI-native experience, or risk becoming the Blockbuster of enterprise software.

If you're an investor:

The market correction was real, but may have been overdone in the short term. Enterprise inertia is a powerful force. However, the long-term trajectory is clear: software that can't justify its premium over AI-powered alternatives will struggle. Position accordingly.

Final thoughts: Beyond the hype

Here's what gets lost in the benchmark wars and stock market drama: we're witnessing the emergence of genuinely autonomous artificial intelligence that can perform knowledge work at or above human-expert levels in specific domains. That's not hype, that's demonstrable reality tested by thousands of developers and enterprises over the past week.

Yes, Opus 4.6 has rough edges. Yes, it's expensive to run at scale. Yes, the writing quality regression is real. But step back and look at the big picture: a system that can autonomously close GitHub issues, draft legal briefs, build entire applications, and reason through complex multi-step problems with minimal human guidance.

Five years ago, that would have been science fiction. Today, it's $5 per million tokens.

The SaaSapocalypse narrative might be overblown in the near term. Enterprise software companies aren't going to disappear overnight. But the fundamental value proposition of software, "we built something complex so you don't have to,” is being challenged by AI that can build custom solutions on demand.

That's not just a game-changer. It's a game-ender for traditional models.

The question isn't whether AI will transform knowledge work. That transformation is already happening. The question is: how quickly will your organization adapt?

The bottom line:

Claude Opus 4.6 isn't just a better AI model, it's a glimpse of how work itself will fundamentally change over the next 18-24 months. Whether that excites or terrifies you probably depends on which side of the disruption you're standing on.

The race is on. The technology is here. The only question left is: what are you going to build with it?

Leave a Comment

Your email address will not be published. Required fields are marked *